Figure 0: Installation view of Gestation, RMIT Gallery, 2000

Presence, Space, Experience


The audible domain provides an intuitive, experiential, visceral, instinctive, spontaneous and intimate perceptual habitat in which the human body is central, and in which a visceral engagement with sonic architectures may challenge the mind-body split that is deeply embedded in Western music theory.

These characteristics allow the interactive sound environment to present a unique articulation of space and place, one in which the fluidity of the human body is empowered to find new ways of engaging with the immediate environment. This many-faceted approach establishes space itself as a performative medium, instructing the user in new ways of listening whilst simultaneously casting the listener as creator: a performative agent.

This paper approaches the interactive sound environment from the perspective of practice-led research, applying experiential feedback as a means of theorising developments in artistic practice, with a predilection for the Kantian empiricists’ perspective. David Borgo discusses the dichotomies implicit in the distinction between the doing and the knowing forms of knowledge. He claims that knowing “comprises static information structures within one’s head, and doing refers to the straightforward process of executing a given operation based on these pre-existing knowledge structures” (2005: 178). Where knowledge structures are pre-existent, this model is useful; however, in the relatively new domain of interactive sound installations, existing theory is limited. Although I am working from the perspective of a musician/sound artist, musical theory (musicology or composition theory) is so entangled in a labyrinthine collection of ‘musical’ thought that it does not generally elucidate the experience of interactive, sonic immersion. Theories from new media and electronic arts are also commonly so removed from the empirical that at best they describe the framework in which the experience occurs. Discussions such as this one therefore need to borrow theories from other disciplines, while still giving precedence to the empirical, in discussing the underlying design and production methods used to heighten a nuanced individual engagement with such an installation.

Introduction

Interactive sound environments are commonly characterised by an interface that allows the triggering of pre-made sonic material according to the X-Y coordinate location of an interactive agent in an architectural space, using either direct triggers (floor pads, light beams) or video tracking. The interactive agent may be a coloured object such as a ball or other everyday item, a person with a coloured hat or shirt, or simply a single contiguous mass: an unidentified body in the space. The sonic content of such installations is a collage of existing materials that contain fixed morphological structures, inherited when the sound samples were generated and recorded, and as such they simply play themselves out, regardless of the unique gestural morphology of the person engaging with them. They allow material to be collaged in ways that vary according to interactive input, but they do not provide a direct relationship between the quality of the gesture and the sonic outcome, seeking instead to offer an immediate and readily accessible interface driven by gross gestural movement.

Throughout my own artistic endeavours in this area, I have sought to apply real-time sound synthesis in order to capture and reflect every minuscule nuance of gesture apparent to the input technologies. I have refined this approach to provide a visceral and almost tactile response, so that those interacting with the system feel that the quality of sound relates directly both to the inertia associated with the mass of the limb they are moving and to the mass of air disturbed by each gesture.

This approach has led to a number of technological and artistic strategies, including the adoption of dynamic morphology (Wishart 1996) to encourage variations in sonic timbre according to gestural input, and by extension, the application of dynamic orchestration (Paine 2004, 2002) within the synthesis engines developed for each installation.

Dynamic morphology and orchestration combine to amplify the uniqueness of the outcome for each person. The aesthetic range is determined by the synthesis algorithms; however, in real-time synthesis many parameters can be used to produce very small, continuous variations in timbral content. This encourages the individual to engage fully with the quality of the environment and their immediate effect upon it, and it provides a very diverse array of possibilities within the timbre space of each instrument. When dynamic orchestration is applied, these qualities are multiplied across the aesthetic domain of the audible synthesis resources.

To keep the system dynamic, it is crucial that all synthesis be real-time. By virtue of the interaction, this institutes a streaming relationship rather than an event-based (state machine) paradigm. It also constitutes a causal loop in which the individual comes to consider the way in which the environment conditions their behaviour and, conversely, the way in which their behaviour conditions the environment. Questions of preferential behaviour and preferential environment come into play in a manner that is not available when the morphology of the sonic feedback is based on pre-recorded, triggered content. This paper discusses a number of works developed by the author and illustrates strategies that provide a direct, visceral and almost tactile engagement with what is otherwise a very abstract sonic and architectural space.
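The contrast between an event-based and a streaming relationship can be sketched as follows. The sketch is in Python for readability; the installations themselves were built in SuperCollider. Both functions and all parameter values are hypothetical. `event_based` fires a stored sample whenever a normalised activity value crosses an assumed threshold, so anything the gesture does beyond that crossing is lost. `streaming` maps every frame of activity onto a continuous synthesis parameter, here an assumed filter cutoff smoothed by a one-pole filter.

```python
def event_based(activity_frames, threshold=0.5):
    """Return the frame indices at which a fixed, pre-recorded sample would fire.

    A sample starts only on a rising edge across the (hypothetical) threshold,
    so the quality of the gesture beyond that crossing does not affect the sound.
    """
    triggers = []
    above = False
    for i, a in enumerate(activity_frames):
        if a >= threshold and not above:
            triggers.append(i)  # rising edge: start the stored sample
        above = a >= threshold
    return triggers


def streaming(activity_frames, smoothing=0.5, min_hz=200.0, max_hz=2000.0):
    """Map every frame of activity onto a continuous (hypothetical) filter cutoff.

    A one-pole smoother follows the activity, so each small change in gesture
    produces a correspondingly small change in timbre.
    """
    cutoffs = []
    state = 0.0
    for a in activity_frames:
        state = smoothing * state + (1.0 - smoothing) * a
        cutoffs.append(min_hz + (max_hz - min_hz) * state)
    return cutoffs


frames = [0.0, 0.6, 0.55, 0.7, 0.2, 0.9]
print(event_based(frames))  # only two trigger points
print(streaming(frames))    # one distinct value per frame
```

In the event-based version, the frames 0.6, 0.55 and 0.7 are indistinguishable once the first trigger has fired. In the streaming version, each of them produces its own cutoff value.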

Space, Place and Presence

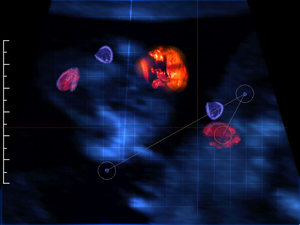

The text at the beginning of this essay describes entering the sound gallery of my interactive environment installation Gestation, which also contains a separate animation gallery, where animated foetuses grow – I will discuss Gestation in more detail later in this paper.

The text hints at a hypothesis: that we come to know space through body and movement, as an examination in time. It takes time to move through space and to understand the relationships of and within space that, through a myriad of complex sensual relationships, provide us with a notion of place.

Sound is fundamentally a temporal medium – requiring enquiry and patience to unravel the intricate data contained therein. Psychoacoustics allows us to disentangle reverberation, discovering information about the size of the sonic environment. When reverberation is considered along with timbre and attack, the human mind can envision the source of the excitation and determine qualities pertaining to the surfaces and furnishings of the space (marble floors and heavy drapes…), the distance from the source, the force of the excitation gesture and often aspects of intent: the accuracy and consistency of a bell strike, for instance, or the energy of purpose or hesitancy. This brings us back to morphology. As discussed earlier, the process of recording fixes the morphology of an audio sample, which is therefore always identical. This is fine for music CDs and sound effects, where the sonic material serves to deliver an image or metaphor. In an interactive installation, however, we quickly come to realise that our interactive intent does not condition the response. The interaction therefore shifts to an external reference (the rules of the game) rather than reflecting our experience and cognition.

This is all well and good, but a space is an abstract conception, and the information we divine about the source is about the ‘other’, the external, detached object of the mind’s eye. Within the context of this paper, our direct relationship with that space is of critical importance – how do we engage with, experience and digest those qualities that our psychoacoustic skills yield? How does this corporeal perceptual awareness condition the manner in which we inhabit the space and engage with others therein? How does it determine the activities we engage in within that space? Consider recording in a studio, where the reverberation is kept to a minimum, providing a sense of control and containment. Contrast this with singing in a cathedral, where the reverberation time is long and the resulting vocal sound is thick, dense and surreal, hanging like the very spirit it proclaims in the air above our heads.

This dilemma regarding the relationship between behaviour and environment is the foundation of my interactive installation art practice. The hustle and bustle of the modern city does not encourage such considerations. As skyscrapers, like goliath sundials, cast shadows in the streets, the wind is directed into amplified tunnels signifying the behemoths’ breath. Within all of this, the human space, the street, is noisy and dusty. Many are left feeling disempowered by the pressures of economic and financial constraints, population density, the decisions of architects and city planners, and the self-esteem of the corporation: bigger, shinier, exclusive, alienating. As relief we consign areas of heavily manicured gardens to parks, conduits for memories of the source of life, the democracy of nature. Little wonder that we have so little real affinity with nature, and that we so rarely consider the relationship between behaviour and environment at the human scale: our place in the system, our personal responsibility, and our individual power for change.

The Sonic Gestalt

Sound is a unique medium, for unlike the visual it is not an external artefact: it literally penetrates the body. Furthermore, it is almost impossible to tie it to a concrete representation of anything beyond a communication of emotional states or experience, something Denis Smalley (1986: 61-96) refers to in his levels of surrogacy. In line with Smalley, I have kept the sonifications in these works toward remote surrogacy. When a listener recognises and identifies with a sound emanating from a loudspeaker, it is natural for them to draw upon their existing cultural and/or environmental context for meaning. Even Smalley, whose theory of Spectromorphology concentrates on intrinsic features in electroacoustic music, acknowledges that “a piece of music is never an autonomous closed artefact” and that music relies on relating to external, cultural experiences for meaning (1986: 110). He says the extrinsic foundation in culture is necessary so that the intrinsic can have meaning. The intrinsic and extrinsic are interactive (Smalley 1997: 107-126). When the source or cause of a sound is not discernible, the listener may draw upon physiological and psychological links with the sound. These take them into the purely experiential and sensual domain, where musical gesture is proprioceptive and concerned with the tension and relaxation of muscles with effort and resistance. In this way sound making is linked to more comprehensive sensorimotor and psychological experience (Smalley 1986: 61-96).

This is a great benefit to the sound artist/composer, who is therefore not tied to real-world representation in order to be judged successful, but must remain mindful of sonic relationships.

The Immersive Environment

Arts practice in general is characterised by complexity, and is reported through experience rather than objective measurement. Human experience is by nature abstract and multi-sensory. Sound, sight, smell, touch and balance each provide a complementary but diverse influence on the communication of a holistic perception.

The notion of an integrated body has been part of critical discourse for some time, but has, in my view, never been fully understood in immersive environments where the path of exploration is unconstrained. Dualist theories subscribe to the well-established Cartesian mind/body split. This split is still well represented in fixed-media works on DVD and CD, and in much CD-ROM and online work that essentially offers content on demand, none of which demands a holistic immersion in experience. Much of this work caters to the mind, leaving the body behind. These positions are so embedded in our society that Hans Moravec (1988) felt able to argue that the age of the protein-based life form was coming to an end, to be superseded by silicon-based life forms. This prompted N. Katherine Hayles to retort: “At the end of the twentieth century, it is evidently still necessary to insist on the obvious: we are embodied creatures” (Hayles 1996: 1-28).

The immersive, interactive environment installation offers the potential to experience ourselves as embodied creatures in a manner hitherto unknown. Art often seeks to provide opportunities to contemplate the transcendent, something that, whilst abstract, is always of the organic. N. Katherine Hayles also draws attention to this when she comments on Moravec’s vision: “Traditionally the dream of transcending the body to achieve immortality has been expressed through certain kinds of spiritualities. Dust to dust, but the soul ascends to heaven. Moravec’s vision represents a remapping of that dream onto cyberspace…” (1996: 1-28).

The cartography of cyberspace is essentially a removed, abstract mental construct mediated by the screen. It communicates experience through the visual cortex but rarely engages the body as a whole, the distributed, visceral register (Gromala 2003), and as such it sets up a dualist construct. By contrast, identist theories hold that the physical system we are is itself conscious. In line with cybernetic theory, identists see the whole as greater than the sum of its parts (Wiener 1948).

Diana Gromala reflects this perspective when she argues that consciousness and memory are distributed through the entire viscera. She is interested in:

The conditions that enable the visceral register to remain in a liminal state, between autonomic processes and conscious awareness, imminent reflection coupled with persistent, quivering flesh. [She asks] is it possible for the visceral perception to be sustained as a persistent recurrence, an infectious field, an open wound, and a compulsive disposition that exists before, beyond or beside habit? This examination of visceral perception and habit may lead, … to a deeper understanding of the transmogrifying fields of a corporeal perception we need for our evolving cultural condition (Gromala 2003: 10).

Gromala’s comments bring forth an extension of Humanist Psychology, expressing the state of mind, memory and experience as distributed through the entire viscera, not housed in the head, and certainly not separable from the body. This is an admirable extension of humanism and one I believe is critical to interactive design.

Hayles’ observations above may seem passé; however, they are not fully considered in most new media art, and they remain a critical imperative if we are to realise the potential of this developing area of artistic practice. When designing interactive, immersive environments, one must consider gestural interaction. This means the manner in which the entire body engages with the work, and the way in which that engagement is filtered as the interactor develops a cognitive map of their relationship to the environment. The relationships between different classes of gesture and the real-time sonification and animation in the space should encourage discovery and affirm the developing cognitive map.

To maximise corporeal perceptual awareness, it is useful to turn for guidance to a dancer, an artist whose raison d’être is the embodiment of experience. Thecla Schiphorst (one of the original developers of the Life Forms choreographic software, in collaboration with Merce Cunningham) has written widely about the body as interface in immersive environments. Describing the human body’s role as interactive agent, Schiphorst says:

I am interested in thinking what is body in relation to the construction of systems. I can describe the body as being fluid, re-configurable, having multiple intelligences, as being networked, distributed and emerging. …From my personal history and my own live performance experience I developed the notion of body knowledge and what I call ‘first person methodology’ and use this as a basis for interface design (Schiphorst 2001: 49-55).

Schiphorst paints a picture of the human body being deeply engaged with the act of interaction on many levels, being intuitive, visceral, corporeal and intelligent while exhibiting parallel processing features.

Indeed, I posit that when walking into an interactive, immersive environment, the first moment of interaction is a profoundly intuitive, corporeal one (Paine 2004: 80-86). A relationship is established as soon as one notices that the environment has changed on one’s entrance. That change is an invitation to explore, and an acknowledgement that one is immediately part of an intimate causal loop. The patterns of relationship in an interactive, immersive environment are made explicit and coherent through many iterations of the closed causal loop, each one rendering the nature of the relationship in greater detail. The user/inhabitant of the interactive, immersive environment installation develops a cognitive map of the responses of the installation. They test this map through repeated exploration, confirming prior experience and actively engaging in the evolution of the ecosystem of which they have become part.

One of the pioneers of interactive arts, the American video and responsive environment artist Myron Krueger (1976), expresses a similar sentiment when discussing his early interactive video works:

In the environment, the participant is confronted with a completely new kind of experience. He is stripped of his informed expectations and forced to deal with the moment in its own terms. He is actively involved, discovering that his limbs have been given new meaning and that he can express himself in new ways. He does not simply admire the work of the artist; he shares in its creation (Krueger 1976: 84).

The shift from spectator to creator is a profound one that will be welcomed by some and rejected by others. As Krueger indicates, the experience of engaging in a responsive environment requires an active commitment to each instant, where every moment of engagement contributes to the creation of the art-work itself. The participant does not have the option of assuming the role of detached spectator. They are inherently part of the process, part of the artwork, the instrument itself. This is a substantial responsibility that the gallery visitor would never have expected in the past, and one that the artist would most likely have been reluctant to relinquish.

Gesture as Relationship

My interactive immersive environments use human gesture, movement and deduced behaviour patterns to form a relationship with the interactive agent, the interactor. I chose gesture as the primary means of engagement to ensure the kind of dynamic and active engagement outlined above. I wanted the relationships between the sonification, the visualisation and the interactor to be many, subtle and complex, defining multifaceted, simultaneous layers of engagement. Furthermore, although most people do not like to dance in public, we inherently understand the relationship of gesture to communication. We have also embodied a profound subconscious awareness of the weight of gesture. Small gestures assert intimacy, inclusiveness, frailty and fineness, while large, energetic gestures are understood as dynamism, commitment, exclusion and possibly anger. I also suggest that each person has a unique vocabulary of gesture, a ‘body language’ that, if read in sufficient detail, brings into being an individual sonic signature.

Musical Gesture

Within an interactive immersive environment, the morphology of gestural interaction will be carefully mapped to sonic or musical gesture. Notions of musical gesture are not new; Professor Robert Hatten suggests that:

Musical gesture is biologically and culturally grounded in communicative human movement. Gesture draws upon the close interaction (and intermodality) of a range of human perceptual and motor systems to synthesize the energetic shaping of motion through time into significant events with unique expressive force (Hatten 2003).

Hatten begins and ends by suggesting that musical gesture is biological. It follows that the human gesture central to musical production is mellifluous, viscous and fluid. It is not made up of individual events, but is rather a contiguous movement that has form, shape, structure and duration. Beyond that, he argues:

Musical gestures are emergent gestalts that convey affective motion, emotion, and agency by fusing otherwise separate elements into continuities of shape and force (2003).

The characteristics of affective emotion and agency are central considerations when designing sonification and visualisation strategies that express, through the interactive process, the intent of the gestural engagement. As discussed above, sound is an excellent medium for this communication, so the trick is analysing the gesture in such a way as to determine qualities of intent (affective emotion and agency, and visceral perception), for without this initial analysis, it is impossible to reflect the interactor’s expression back to them, to start a dialogue, or to authentically acknowledge their presence and innate capacity within the microcosm that is the interactive immersive environment.

The Works

This exploration of corporeal perceptual awareness has evolved over a series of interactive, immersive environment installations:

1. Moments of a Quiet Mind (Linden Gallery 1996)

2. Ghost in the Machine (Linden Gallery 1997)

3. Map1 (Span, Next Wave Festival 1998, Musical Instrument Museum, Berlin 1999)

4. Map2 (Musical Instrument Museum, Berlin 1999/2000, Tasmanian School of Art 2004)

5. Gestation (RMIT Gallery 2002, Florida State Museum 2003, Dublin (MediaLab EU) 2003, New York Digital Salon 2004)

Figure 1: Dr Garth Paine in MQM

The early works, Moments of a Quiet Mind (MQM) and Ghost in the Machine, used pre-made sound files triggered by floor pads and light beams. There were 198 different files, composed in rising intensity, with file length decreasing as intensity increased.
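The selection logic this implies can be sketched in Python. This is a hypothetical reconstruction, not the installations’ code. It assumes intensity is normalised to 0.0-1.0 and that the 198 files are indexed in order of rising intensity. The 30 s and 3 s lengths are illustrative placeholders, not the actual file durations.

```python
NUM_FILES = 198  # number of pre-made sound files cited in the text


def file_for_intensity(intensity, num_files=NUM_FILES):
    """Return the index of the file to trigger for a normalised intensity.

    Files are assumed to be sorted by rising intensity, index 0 being calmest.
    """
    clamped = min(max(intensity, 0.0), 1.0)
    # Scale to 0..num_files-1 and round down; 1.0 maps to the last file.
    return min(int(clamped * num_files), num_files - 1)


def nominal_length(index, longest=30.0, shortest=3.0, num_files=NUM_FILES):
    """Hypothetical file length in seconds, shrinking linearly with intensity."""
    t = index / (num_files - 1)
    return longest + (shortest - longest) * t
```

Whatever the exact values, such a scheme can only choose *which* fixed morphology to play. It cannot reshape the sound to the gesture itself.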

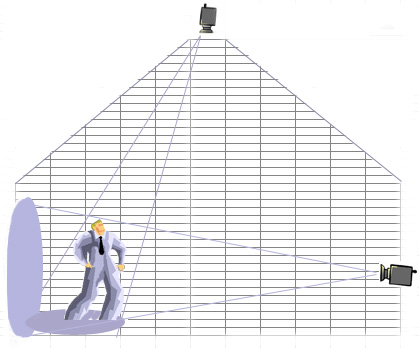

For MAP1, MAP2 and Gestation, I moved to video tracking, using a single camera mounted in the centre of the ceiling. The exception was MAP2, for which I used two cameras mounted so as to create a three-dimensional tracking matrix. Video tracking allowed the environment to have a persistent relationship with those within it, mapping presence as well as gesture. This amplified the awareness of immersion: the interactor was immediately part of an intimate causal loop, an immersive ecosystem. They were no longer the ‘player’ addressing the space as ‘other’, but part of the whole, with no area out of view and no movement or gesture opaque.

Because video tracking established a persistent relationship, real-time synthesis became possible, mapping every small nuance of the gestural articulation of space into sound and/or image. This heralded a much more dynamic system that acknowledged the uniqueness of each person’s engagement.
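How a camera image can become a persistent stream of per-region activity values is sketched below, using simple frame differencing. This is an assumption: the text does not describe the Very Nervous System’s internal processing, so treat it as a simplified stand-in, written in Python. The function returns one value per cell of an assumed 8 x 8 grid, ordered row by row from the top-left. The regions are read in the same order as the VNS regions discussed for MAP2 below.

```python
def region_activity(prev_frame, frame, grid=8):
    """Return grid*grid motion values, ordered row by row from the top-left.

    prev_frame and frame are equal-sized 2-D lists of greyscale pixel values
    whose dimensions are assumed to be divisible by grid. Each value is the
    summed absolute pixel change inside one cell between the two frames.
    """
    height, width = len(frame), len(frame[0])
    cell_h, cell_w = height // grid, width // grid
    activity = []
    for row in range(grid):
        for col in range(grid):
            total = 0
            for y in range(row * cell_h, (row + 1) * cell_h):
                for x in range(col * cell_w, (col + 1) * cell_w):
                    total += abs(frame[y][x] - prev_frame[y][x])
            activity.append(total)
    return activity
```

Because a value is produced for every region on every frame, stillness (all zeros) is itself information, and presence can be tracked continuously rather than only when a trigger is crossed.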

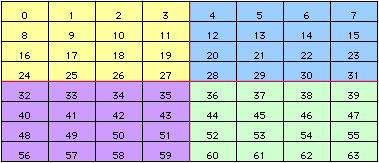

Figure 2: Images of MAP2 in the Musical Instrument Museum, Berlin

MAP2

MAP2 was commissioned by the Staatliches Institut für Musikforschung (State Institute for Music Research - SIM), Berlin as part of the Festival of Culture (KUNSTFEST) for the millennium. It was developed in collaboration with Dr Ioannis Zannos at SIM and exhibited at the Musical Instrument Museum in Berlin 1999-2000.

Like the works that preceded it, MAP2 explored the interaction between human movement and the creation of music. It undertook to create an environment in which people could consider the impact they make on their immediate environment and the causal loops that exist between behaviour and quality of environment. Our personal aesthetic leads to decisions about preferential behaviour patterns and in turn preferential environmental qualities; one conditions the other.

In an effort to move fully into real-time synthesis and to make gestural morphology the top priority, MAP2 used two cameras to provide a full three-dimensional mapping of gesture and behavioural patterns. The vertical and horizontal planes assumed different roles. The horizontal plane was divided into four zones (see Figure 3), each of which tracked the x-y position of activity within it. This made independent, polyphonic articulation of the sonic environment available, and with it collaborative sonification. In line with the three-dimensional tracking, the vertical plane was segmented to place some sonification options above head height, encouraging a wider range of exploratory gestures.

Figure 3: MAP2 Horizontal camera analysis grid
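The text does not say how the x-y position of activity within each zone was derived. One plausible approach, sketched here in Python as an assumption rather than a description of MAP2, is an activity-weighted centroid over the zone’s sixteen regions. The regions are assumed to be laid out as a 4 x 4 block in row-major order.

```python
def zone_centroid(values, width=4):
    """Return the activity-weighted (x, y) centre of a zone, or None if still.

    values holds width*width region activities in row-major order; x and y are
    returned in region units, with (0, 0) at the zone's top-left region.
    """
    total = sum(values)
    if total == 0:
        return None  # no movement detected in this zone
    x = sum((i % width) * v for i, v in enumerate(values)) / total
    y = sum((i // width) * v for i, v in enumerate(values)) / total
    return x, y
```

A weighted centroid moves smoothly as activity shifts between neighbouring regions. That suits the aim of continuous rather than stepped control.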

The video tracking was done using two small CCTV cameras and the Very Nervous System (VNS) (Rokeby 1986). The VNS data takes the form of an array of integers, one per defined region, ordered from the top-left corner to the bottom-right corner. It was therefore necessary to gather the regions associated with each zone into a new array. A Mapdata class was defined in SuperCollider (McCartney 1996) containing methods that collected the zone data into separate arrays as follows:

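```supercollider
// cam1kr holds the 64 VNS region values from camera 1, numbered row by row
// from the top-left of an 8 x 8 grid; each zone gathers one 4 x 4 quarter.
// Zone 1: top-left quarter (rows 0-3, columns 0-3)
zone1 = #[0, 1, 2, 3, 8, 9, 10, 11, 16, 17, 18, 19, 24, 25, 26, 27].collect({ arg i; cam1kr.at(i).poll; });
// Zone 2: top-right quarter (rows 0-3, columns 4-7)
zone2 = #[4, 5, 6, 7, 12, 13, 14, 15, 20, 21, 22, 23, 28, 29, 30, 31].collect({ arg i; cam1kr.at(i).poll; });
// Zone 3: bottom-right quarter (rows 4-7, columns 4-7)
zone3 = #[36, 37, 38, 39, 44, 45, 46, 47, 52, 53, 54, 55, 60, 61, 62, 63].collect({ arg i; cam1kr.at(i).poll; });
// Zone 4: bottom-left quarter (rows 4-7, columns 0-3)
zone4 = #[32, 33, 34, 35, 40, 41, 42, 43, 48, 49, 50, 51, 56, 57, 58, 59].collect({ arg i; cam1kr.at(i).poll; });
```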

Figure 4: SuperCollider Code for separation of VNS data into region arrays
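The hard-coded index lists in Figure 4 follow directly from the grid geometry. They describe an 8 x 8, row-major grid of 64 regions split into four 4 x 4 quarters. The sketch below derives them in Python rather than SuperCollider, purely for illustration. `zone_indices` and `split_zones` are hypothetical helper names. The zone numbering follows the SuperCollider listing.

```python
GRID = 8          # regions per row, inferred from the index lists (64 in total)
HALF = GRID // 2  # each zone is a HALF x HALF block of regions


def zone_indices(row_start, col_start, size=HALF, grid=GRID):
    """Indices of a size x size block of regions in a row-major grid."""
    return [r * grid + c
            for r in range(row_start, row_start + size)
            for c in range(col_start, col_start + size)]


ZONES = {
    1: zone_indices(0, 0),        # top-left
    2: zone_indices(0, HALF),     # top-right
    3: zone_indices(HALF, HALF),  # bottom-right
    4: zone_indices(HALF, 0),     # bottom-left
}


def split_zones(frame):
    """Split one 64-value frame of region data into four 16-value zone lists."""
    return {zone: [frame[i] for i in idx] for zone, idx in ZONES.items()}
```

Deriving the lists from `GRID` rather than typing them out makes it easy to change the grid resolution without re-entering every index.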

Each zone could then be analysed and sonified independently. This made it possible to control a total of sixteen instruments (four per quarter) and eight filters (two per quarter) independently but simultaneously, each introduced on the basis of increasing thresholds of movement. Each of the quadrants therefore contained a total synthesis resource of three instruments and two filters. The filters were driven by a combination of positional and threshold data and were used to create small modulations of the sonic tapestry in response to diminutive nuances of gesture. This provided a wide range of modulation for each instrument layer.

Dynamic orchestration was incorporated into the sound design by adding instruments on the basis of the threshold of activity in each quadrant. As stated above, four instruments were made available within each quarter of the horizontal space, each active within a different activity range, so that the sonic possibilities increased with an increase in gestural dynamic. When the breadth of the filtering outcomes is considered, this design allowed a flexible and mellifluous timbral variation based on the dynamic and position of gestures within each zone. A careful aesthetic evolution was mapped so that the layers corresponded to the increasing inertia and air mass associated with the gestures, as discussed above. The ‘orchestra’ consisted of four sonic layers (instruments), described in general terms below:

1. Layer01 consisted of a drone-like sound that was constantly being modulated by resonant filters as outlined above. This was the only sound audible when the installation was uninhabited and, in that state, the resonant frequencies slowly changed. This layer responded more dynamically when engaged interactively, because the interactive process then drove the filters.

2. Layer02 was a more dynamic and more defined sound. It moved through greater pitch ranges and its filter outputs changed more dramatically in relation to interactive input within its threshold range.

3. Layer03 was a bubbly, bouncy sound that used a varying echo to accentuate the dynamic of activity that was needed to add this layer to the orchestration.

4. The vertical active space (camera02) was set at just above head height and defined a row through the middle of the installation space that was mapped to play a physically modelled guitar sound.
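The threshold-based orchestration and the drone filter behaviour described above can be sketched together in Python. The threshold values, frequencies and time constants are all hypothetical, since the text does not publish them. The sketch follows the description of four instruments per quarter joining at rising activity thresholds. It also follows the description of a drone whose filters drift slowly when the space is empty and are driven by activity when it is inhabited.

```python
import math

# Hypothetical activity thresholds (summed region activity in one zone) at
# which each successive layer joins the orchestration.
LAYER_THRESHOLDS = (0.0, 50.0, 200.0, 600.0)


def active_layers(zone_activity, thresholds=LAYER_THRESHOLDS):
    """Number of layers sounding: one more for every threshold reached.

    The 0.0 threshold keeps the first (drone) layer audible at all times.
    """
    return sum(1 for t in thresholds if zone_activity >= t)


def drone_filter_freq(t, zone_activity, base_hz=300.0, drift_hz=100.0,
                      drift_period=60.0, depth_hz=1500.0, max_activity=600.0):
    """Hypothetical resonant-filter centre frequency (Hz) for the drone layer.

    With no activity the frequency drifts slowly around base_hz along a sine
    of drift_period seconds; activity adds an upward offset capped at depth_hz.
    """
    drift = drift_hz * math.sin(2 * math.pi * t / drift_period)
    drive = depth_hz * min(zone_activity / max_activity, 1.0)
    return base_hz + drift + drive
```

Layers are added cumulatively here, so a more energetic gesture keeps the quieter layers sounding beneath the new ones. This matches the stated aim that sonic possibilities increase with gestural dynamic.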

Figure 5: Video tracking camera set-up in MAP2

The key to this sound design was an increase in the internal complexity of the sounds as gestural intensity increased, together with a constant variation in the filtered timbre with all gestural activity. The dynamic orchestration and dynamic filtering produced a very fluid, mellifluous sound environment. In it, the space itself became a flowing, graceful medium that could be sculpted into amorphous forms, swept clean and reconfigured at will. The immediacy of this approach was augmented with an eight-channel sound spatialisation (one stereo pair per quarter). It was designed so that sound layers independently followed gestures, creating a sense of characterisation in the sound. Lighter sounds would come towards you when the dynamic of gesture was light, and heavier sounds would rush off in the direction of the gesture. It was possible to create the effect of passing the sound from one zone to another. Users reported that the spatialisation enhanced the immersive experience, heightening corporeal perceptual awareness and providing a visceral, almost tactile articulation of place.
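The spatialisation described above can be sketched with one stereo pair per quarter, using equal-power panning inside a pair and an equal-power crossfade to pass a sound between zones. This is a Python illustration under stated assumptions, not the MAP2 implementation. The channel order (zone *k* driving channels 2*k* and 2*k*+1), the 0.0-1.0 position scale and the use of equal-power curves are all hypothetical choices.

```python
import math

NUM_ZONES = 4  # one stereo pair of loudspeakers per quarter -> 8 channels


def zone_pan_gains(zone, x):
    """Return 8 channel gains placing a sound within one zone's stereo pair.

    zone is 0-3 (assumed channel order: zone k drives channels 2k and 2k+1);
    x is the gesture's left-right position within the zone, 0.0-1.0.
    Equal-power panning keeps left**2 + right**2 == 1.
    """
    gains = [0.0] * (2 * NUM_ZONES)
    angle = min(max(x, 0.0), 1.0) * math.pi / 2
    gains[2 * zone] = math.cos(angle)
    gains[2 * zone + 1] = math.sin(angle)
    return gains


def crossfade(zone_a, zone_b, x, mix):
    """Pass a sound from zone_a to zone_b (distinct zones).

    mix runs from 0.0 (entirely in zone_a) to 1.0 (entirely in zone_b); an
    equal-power crossfade keeps total power constant during the transfer.
    """
    ga, gb = zone_pan_gains(zone_a, x), zone_pan_gains(zone_b, x)
    wa, wb = math.cos(mix * math.pi / 2), math.sin(mix * math.pi / 2)
    return [wa * a + wb * b for a, b in zip(ga, gb)]
```

Holding the summed power constant means that a sound passed from one quarter to another neither dips nor swells in loudness midway. Its apparent weight is therefore governed by the gesture rather than by the panning law.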

Figure 6: Image of the animation gallery in Gestation (RMIT Gallery 2000)

Conclusion

In this paper I have articulated the basis of my interactive, immersive environment installation practice. I have sought to explain why the Cartesian mind-body split is inoperable when the inhabitant is engaged at a deeply visceral, corporeal level, and attempted to express the potential that experiential environments have for the development of individual interactive expression. In so doing I have cited a number of practitioners whose work addresses embodiment, but I have done so in the context of interactive music systems, with a particular focus on developing experiential media. It is enlightening, then, to see how close the thoughts of Professor Robert Hatten are to those of N. Katherine Hayles. Both remind us of the organic and deeply embedded nature of being fully aware in every moment. With support from Schiphorst and Krueger, they illustrate the shift that occurs within an interactive immersive environment from spectator to creator. With this move from a relatively passive to an active agent, the designer of interactive immersive installations has to consider the multi-sensory nature of experience. Sound, sight, touch, balance and possibly smell must be engaged in a manner that acknowledges the nuance of each individual’s engagement, honouring their commitment to exploring and learning the interactive potential of the artwork.

The relationship between gestural articulation of space, the interactive response and the evolution of a sense of place is explicit in the work I have presented. The cybernetic closed-causal loop defines this relationship in a true “whole is greater than the sum of the parts” manner. In 1948, Norbert Wiener excitedly pointed out that life is an emergent condition (Bermudez 1999, Gromala 2003). Julio Bermudez eloquently describes the nature of living interfaces:

A close scrutiny of life reveals that living creatures are not material entities separated from their surroundings but rather regulatory interfaces of interactions occurring between their internal and external environments. Life is an emergent condition whenever and wherever certain complex internal and external tensions meet one another and find some dynamic balance (Bermudez 1999: 16)

My interactive immersive installations attempt to take this notion as their foundation and address the body as interface (Scott 1999). Perhaps we have moved past the Cartesian paradigm into a new, wholesome and immersive sense of presence, space and place? But in order for enquiry to have meaningful outcomes that persevere, we must remember that we remain embodied creatures (Hayles 1996).

References

Bermudez, J. (1999) “Between Reality and Virtuality: Towards a New Consciousness?” in Reframing Consciousness, ed. R. Ascott, Exeter: Intellect

Borgo, D. (2005) Sync or Swarm: Improvising Music in a Complex Age, New York: Continuum

Gromala, D. (2003) “A Sense of Flesh: Old habits and Open Wounds”, Consciousness Reframed 5 (CAiiA-Star), University of Wales College, Newport

Hatten, R. (2003) Course Notes: Musical Gesture: Theory and Interpretation, http://www.indiana.edu/~deanfac/blfal03/mus/mus_t561_9824.html, accessed June 24, 2003

Hayles, N.K. (1996) “Embodied Virtuality: Or How To Put Bodies Back Into The Picture” in Immersed In Technology: Art and Virtual Environments, eds. M.A. Moser and D. MacLeod, Massachusetts: MIT Press

Krueger, M. (1976) Computer Controlled Responsive Environments, PhD dissertation, University of Wisconsin, Madison

McCartney, J. (1996) SuperCollider, http://www.audiosynth.com/, accessed February 16, 2007

Moravec, H. (1988) Mind Children: The Future of Robot and Human Intelligence, Cambridge: Harvard University Press

Paine, G. (2004) “Gesture and Musical Interaction: Interactive Engagement Through Dynamic Morphology”, Proceedings of the 2004 Conference on New Interfaces for Musical Expression, June 3-5, Hamamatsu, Japan, Shizuoka University of Art and Culture, pp. 80-86

Rokeby, D. (1986) Installations: Very Nervous System (1986-1990), http://homepage.mac.com/davidrokeby/vns.html, accessed February 16, 2007

Schiphorst, T. (2001) “Body, Interface, Navigation Sense and the State Space” in The Art of Programming: Sonic Acts, Amsterdam: Sonic Acts Press

Scott, J. (1999) “The Body as Interface” in Reframing Consciousness, ed. R. Ascott, Exeter: Intellect

Smalley, D. (1986) “Spectro-morphology and Structuring Processes” in The Language of Electroacoustic Music, ed. S. Emmerson, New York: Macmillan

Smalley, D. (1997) “Spectromorphology: Explaining Sound-Shapes”, Organised Sound vol. 2 no. 2, pp. 107-126

Wiener, N. (1948) Cybernetics, Massachusetts: MIT Press

Wishart, T. (1996) On Sonic Art, ed. S. Emmerson, Philadelphia: Harwood