Introduction

Music is known as the most abstract of the arts – referring only to its own world of melody, harmony, dissonance, and resolution. Nonetheless, some composers have sought to represent the world outside music in musical form, with well-known examples such as Vivaldi’s Four Seasons and Beethoven’s Pastoral Symphony. In the early twentieth century, Russolo’s “Manifesto on the Art of Noises” extended music beyond the classical orchestra to include the sounds of traffic and industry, and so referenced the world beyond the concert hall. Today’s audio technology has made it possible to reference the world even further through sampling, in works such as Matthew Herbert’s “The Truncated Life of a Modern Industrialised Chicken”, composed from recordings of 10,000 battery hens. Electronic technology, such as the Geiger counter that clicks in response to invisible and deadly radiation, has also made it possible to listen to things that do not naturally make sounds. The invention of the Musical Instrument Digital Interface (MIDI) in 1982 enabled researchers to connect computers to pop-music synthesisers and to listen to galaxies, chemical reactions, mathematical equations and any other data from scientific and engineering experiments. At the first meeting of the International Community for Auditory Display (ICAD) in 1992, Carla Scaletti provided a working definition of sonification as “a mapping of numerically represented relations in some domain under study to relations in an acoustic domain for the purpose of interpreting, understanding or communicating relations in the domain under study” [Scaletti, 1994]. Since then the definition has been broadened and simplified to the use of “non-verbal sounds to convey useful information” [Barrass, 1998], which is now the generally accepted form [Kramer et al., 1999].
This less technical definition emphasises the purpose of sonification, leaving “useful” open to the much broader range of activities that are the subject of sonification today, such as biofeedback of gesture and posture, mobile wayfinding and audio branding.

At the first ICAD meeting, Sarah Bly commented that “the lack of a theory of sonification is a gaping hole impeding progress in the field” [Bly, 1994]. Developments in audio technology have since increased the range of options available for sonification, but there has not been a commensurate development in theory. At the same meeting, Sheila Williams proposed that the (then) new theory of human hearing called Auditory Scene Analysis (ASA) could provide a foundation for sonification [Williams, 1994]. This theory describes the perception of auditory “streams” that are similar in concept to visual objects [Bregman, 1990]. However, the dominant design pattern in sonification continues to be the musical metaphor embodied by the MIDI protocol, which is composed of instrument channels that vary in pitch and loudness. This paper investigates an alternative stream-based model founded on the perception of auditory figure/ground relations rather than instrument channels. The model is developed through a series of case studies that integrate the theory of Auditory Scene Analysis into sonification. The next section presents four case studies that explore and develop the practical application of this theory in sonification. The section after that describes the Stream-based Sonification Diagram, which encapsulates the knowledge from the case studies in a form that can be applied to a general range of tasks and data types. The final section summarises the wider significance of this research and raises open questions for future directions.

Case Studies

This section presents four case studies that identify and develop the concept of stream-based sonification: ImageListener and Galloping Loops; Dirt/Gold and Figure/Ground; Dissonance and Streaming; and Virtual Geiger Counter and Simultaneous Streams.

ImageListener and Galloping Loops

The sonification “ImageListener and Galloping Loops” was developed to support the analysis of environmental change captured in satellite imagery. It was presented as a case study at the International Conference on Auditory Display in 1996 [Barrass, 1996]. The data consist of the difference in ground reflectivity between satellite images taken twenty years apart. An analyst can click on a visualization of this dataset to hear a sonification of the change at a particular point using the ImageListener, shown in Figure 1.

Figure 1. ImageListener interface showing change in land cover in Canberra

It was difficult to compare the changes at different points in the image because individual pixels are so small. The sonification did not initially solve this problem either, because it was hard to remember the sound at the previous point while clicking on another. The problem was solved by looping the sonifications of the two points, which made comparisons much easier: pairs of points with similar values produced a sound that was distinct from pairs with greater differences. These alternating looped points sounded very similar to the Van Noorden gallop used in experiments on auditory stream perception [Van Noorden, 1977]. This observation laid the foundation for further exploration of the Van Noorden effect in the following case studies. The ImageListener also raised questions about auditory memory in relation to sonification. How long can we hold a sound in memory? Is it possible to compare two sounds held in memory? What is the effect of time on the judgment of difference between two sonified data points? How many levels of quantitative difference can be judged between two sonified points?
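The looped comparison can be sketched as an ABA_ gallop schedule, in which two data points alternate with a silent slot at a fixed inter-onset interval. This is a minimal illustration under stated assumptions, not the ImageListener's actual code: the helper name `gallop_schedule`, the 200 ms default IOI (borrowed from the later case studies) and the example change values are all hypothetical.

```python
from typing import List, Optional, Tuple

# (onset in ms, data value), where None marks the silent slot of the gallop
Event = Tuple[int, Optional[float]]


def gallop_schedule(value_a: float, value_b: float, ioi_ms: int = 200,
                    cycles: int = 4) -> List[Event]:
    """Hypothetical helper: loop two data points as a Van Noorden ABA_ gallop.

    Each cycle has four equally spaced slots: A, B, A and a rest. Similar
    values tend to be heard as one galloping stream; sufficiently different
    values tend to split into two separate, regular streams.
    """
    pattern: List[Optional[float]] = [value_a, value_b, value_a, None]
    events: List[Event] = []
    for cycle in range(cycles):
        for slot, value in enumerate(pattern):
            onset_ms = (cycle * len(pattern) + slot) * ioi_ms
            events.append((onset_ms, value))
    return events


if __name__ == "__main__":
    # Made-up change values at two clicked pixels.
    for onset_ms, value in gallop_schedule(0.12, 0.57, cycles=1):
        print(onset_ms, value)
```

Each data value would then drive a synthesis parameter at its onset; looping the schedule keeps both points present in the ear at once, so the listener judges the rhythm of the pair rather than recalling an earlier sound.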

Dirt/Gold and Figure/Ground

Dirt/Gold and Figure/Ground was a sonification designed in response to Bly’s challenge to distinguish gold from dirt in soil samples that cannot be classified in fewer than six dimensions [Bly, 1994]. The application of the theory of Auditory Scene Analysis in the design of this sonification was described in a chapter of the Csound Book [Barrass, 1998]. The sonification was realised using a six-parameter FM algorithm that produces a rich, non-linear space of timbres ranging from vibrating chirps and bell-like dongs to deep gurgles and noisy drones. Data points in the gold category were mapped to brighter timbres, while those in the dirt category were mapped to duller, deeper timbres. The Van Noorden gallop was used to ensure that the gold data segregated perceptually from the dirt. Ten points from the gold category were alternated with ten points from the dirt category and played at a rate of 5 tones per second (an intertone onset interval (IOI) of 200 ms). The parameters of the FM algorithm were adjusted to maximise the segregation between the gold and dirt categories. Twenty-seven subjects between the ages of 20 and 60 were asked to classify 30 random points from the soil dataset. The subjects could take as long as they liked, with as many repetitions as they needed. The results show a 74% success rate, significantly better than chance (p < 0.01) [Barrass, 1998]. In further development, the use of the Van Noorden effect to scale the FM parameter space also pointed to the potential to listen to more than one point at a time. This led to an experiment in which 1000 random points of gold and dirt were played back at a rate of 10 points per second to produce a 100-second granular synthesis texture. The sonification gave an impression of the distribution and quantity of gold, which stood out as brighter figures against the duller background of the dirt.
This was a significant development because it demonstrates how stream-based sonification, through the perception of figure/ground streams, may allow listeners to answer questions about a dataset as a whole.
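The two stimuli described above, the alternating gold/dirt sequence at 5 tones per second and the 1000-point texture at 10 points per second, can be scheduled as in the sketch below. It only computes onsets and a brightness control per point; the `BRIGHTNESS` values are hypothetical stand-ins for the six-parameter Csound FM timbres, which are not reproduced here.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical brightness values standing in for the FM timbres:
# gold maps to a brighter timbre, dirt to a duller one.
BRIGHTNESS: Dict[str, float] = {"gold": 0.9, "dirt": 0.3}


def alternating_sequence(n_each: int = 10, tones_per_second: float = 5.0
                         ) -> List[Tuple[int, str, float]]:
    """Alternate gold and dirt points as (onset_ms, category, brightness)."""
    ioi_ms = round(1000 / tones_per_second)  # 5 tones per second -> 200 ms
    sequence: List[Tuple[int, str, float]] = []
    for i in range(2 * n_each):
        category = "gold" if i % 2 == 0 else "dirt"
        sequence.append((i * ioi_ms, category, BRIGHTNESS[category]))
    return sequence


def granular_texture(categories: List[str], points_per_second: float = 10.0
                     ) -> Tuple[List[Tuple[float, float]], float]:
    """Schedule one grain per data point; return (grains, duration_s).

    Each grain is (onset in seconds, brightness of its category).
    """
    grains = [(i / points_per_second, BRIGHTNESS[c])
              for i, c in enumerate(categories)]
    duration_s = len(categories) / points_per_second  # 1000 / 10 -> 100 s
    return grains, duration_s


if __name__ == "__main__":
    rng = random.Random(1)
    points = [rng.choice(["gold", "dirt"]) for _ in range(1000)]
    grains, duration_s = granular_texture(points)
    print(len(grains), duration_s)
```

Run as a script, this prints `1000 100.0`, matching the 100-second texture. The random labels here are placeholders; in the experiment the points came from the soil dataset, so the proportion of bright grains reflects the actual quantity of gold.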

The Dirt and Gold case study raises the question of whether the Van Noorden gallop could be used to perceptually scale a general range of timbre spaces. Other questions emerging from this case study include: How well can listeners judge statistical information about the distribution of two categories from a granular stream-based sonification? What is the relation between playback rate and perception of statistical information?

Dissonance and Streaming

The sonification Dissonance and Streaming was designed for an article in the IEEE Journal of Intelligent Systems that compared three different approaches to sonifying the same dataset [Hearst, 1997]. The scenario involved monitoring the breakdown of a ship turbine system over an eight-minute period. The dataset consisted of a timeline of values from sensors in various parts of the system that trended towards dangerous levels at various times and rates. The sonification design was a further extension of the Van Noorden gallop used in the previous case studies. In this instance the gallop consisted of three reference tones interleaved with four tones that summarised turbine subsystems. In normal operation the reference tones produce a regular beep-beep-beep figure that stands out against the lower and more variable background of the subsystem tones. As a subsystem variable moves towards a dangerous level, the related tone begins to group with the reference figure and disrupts its regularity to produce a different rhythm. The 25 possible variations in the figure are not pre-ordained to signal specific states, but emerge from the relations between the variables. The reference figure becomes increasingly insistent and dominant as more subsystems group with it, until it sounds like a conventional alarm in the last minutes of the emergency.
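The interleaving and grouping behaviour can be sketched with pitch as the only streaming cue. Everything numeric here is an assumption: the reference pitch, the octave offset for normal subsystems, the `S1 R S2 R S3 R S4` ordering, the subsystem names and the fixed 3-semitone grouping threshold are hypothetical placeholders, and real grouping also depends on the IOI, as the Stream-based Sonification Diagram later makes explicit.

```python
from typing import Dict, List, Tuple

REFERENCE_PITCH = 72.0    # hypothetical MIDI note number of the reference tones
SAFE_OFFSET = -12.0       # hypothetical: normal subsystems sound an octave lower
GROUPING_SEMITONES = 3.0  # hypothetical grouping threshold at a fixed IOI


def subsystem_pitch(level: float) -> float:
    """Map a normalised level (0 = normal, 1 = dangerous) to a pitch that
    rises towards the reference pitch as the level approaches danger."""
    level = min(max(level, 0.0), 1.0)
    return REFERENCE_PITCH + SAFE_OFFSET * (1.0 - level)


def interleaved_cycle(levels: Dict[str, float]) -> List[Tuple[str, float]]:
    """One cycle S1 R S2 R S3 R S4: three reference tones between four
    subsystem tones (this ordering is an assumption)."""
    names = list(levels)
    if len(names) != 4:
        raise ValueError("this sketch assumes exactly four subsystems")
    cycle: List[Tuple[str, float]] = []
    for i, name in enumerate(names):
        cycle.append((name, subsystem_pitch(levels[name])))
        if i < len(names) - 1:
            cycle.append(("ref", REFERENCE_PITCH))
    return cycle


def grouped_with_reference(levels: Dict[str, float]) -> List[str]:
    """Predict which subsystem tones are close enough in pitch to join the
    reference figure and disrupt its regular rhythm."""
    return [name for name, level in levels.items()
            if abs(subsystem_pitch(level) - REFERENCE_PITCH) < GROUPING_SEMITONES]


if __name__ == "__main__":
    levels = {"boiler": 0.0, "pump": 0.5, "shaft": 0.9, "cooling": 1.0}
    print(interleaved_cycle(levels))
    print(grouped_with_reference(levels))
```

Because grouping is computed from each level rather than looked up from a table of alarm states, the rhythm that results from several subsystems joining the figure is emergent, which is the property the case study relies on.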

The Dissonance and Streaming case study is significant because it develops a way to monitor continuous information about a system that does not depend on pre-specified alarm triggers. Awareness of the continuous state of the system may enable the operator to predict and prevent a problem before it becomes an emergency. The emergent sonification is specific to the system, so different instances of the same type of system will sound similar but not identical. The operator may learn important general states in advance, but over time more specific meanings may emerge through experience with a particular system.

Some questions for further investigation coming out of this case study include: How many variables can be monitored with this figure/ground interleaving technique? How effective is this technique for the ambient awareness of a system for periods of an hour, a day, a week or a month? How many specific states or conditions can be recognized with this technique, and how long do they take to learn?

Virtual Geiger Counter and Simultaneous Streams

The Virtual Geiger Counter is an interactive sonification designed for the VRGeo research consortium on Virtual Reality for Oil and Gas exploration [Barrass and Zehner, 2000]. The sound of a Geiger counter is familiar to most seismic interpreters, who recognize the way the clicking varies with changes in radiation. One of the expert interpreters used this sonification to confirm expected changes in radiation in the 3D visualization of geological strata. The interpreters were also interested in the way that other variables, such as porosity, chemicals and conductance, vary at the same time. In short trials over a series of meetings, the interpreters quickly understood the extension of the Virtual Geiger Counter to other variables. The need to understand relative changes led to the suggestion to sonify more than one variable at the same time. In the next version, the generic clicking noise was replaced with timbre samples that segregated at an intertone onset interval (IOI) of 200 ms when played in a Van Noorden gallop. This stream segregation increases at the faster rates of clicking caused by higher levels of radiation and other variables. The data values were then used to control a filter that affected the spectral centroid of the timbre grains. Uncorrelated variables produce two distinctly separate Geiger counter streams with different timbres, whereas correlated variables merge into a single Geiger stream with increased click density. This dynamic merging from two streams to one, based on the relations in the data, is significant because it distinguishes stream-based sonification from conventional channel-based sonification, which assumes perceptual independence between auditory channels.

Figure 2. The Virtual Geiger-Counter
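The click generation and filter control described for the Virtual Geiger Counter can be sketched as follows. Modelling clicks as a Poisson process whose rate follows the data value is an assumption (it mimics the statistics of a real Geiger counter), as are the frame-based probe, the 500 Hz to 8 kHz cutoff range and the made-up well-log values; the sketch produces click times and cutoffs only, not audio.

```python
import random
from typing import List


def geiger_clicks(values: List[float], frame_s: float, max_rate_hz: float,
                  rng: random.Random) -> List[float]:
    """Click times (s) for one variable, one data value per frame.

    Each value, clamped to 0..1, sets the mean click rate for its frame, and
    clicks within a frame follow a Poisson process (exponential gaps). Because
    exponential gaps are memoryless, restarting at each frame boundary is valid.
    """
    clicks: List[float] = []
    for i, value in enumerate(values):
        rate_hz = min(max(value, 0.0), 1.0) * max_rate_hz
        if rate_hz == 0.0:
            continue  # silent frame
        start, end = i * frame_s, (i + 1) * frame_s
        t = start + rng.expovariate(rate_hz)
        while t < end:
            clicks.append(t)
            t += rng.expovariate(rate_hz)
    return clicks


def filter_cutoff_hz(value: float, low_hz: float = 500.0,
                     high_hz: float = 8000.0) -> float:
    """Map a value to a low-pass cutoff so higher values give brighter grains.
    Geometric interpolation makes equal value steps equal frequency ratios."""
    value = min(max(value, 0.0), 1.0)
    return low_hz * (high_hz / low_hz) ** value


if __name__ == "__main__":
    rng = random.Random(0)
    radiation = [0.2, 0.5, 0.9]   # made-up normalised well-log values
    porosity = [0.8, 0.5, 0.1]    # made-up, anti-correlated with radiation
    for name, series in (("radiation", radiation), ("porosity", porosity)):
        clicks = geiger_clicks(series, frame_s=1.0, max_rate_hz=30.0, rng=rng)
        cutoffs = [round(filter_cutoff_hz(v)) for v in series]
        print(name, len(clicks), cutoffs)
```

Each variable gets its own click train and timbre. When the two series are correlated, their rates and cutoffs track each other and the trains tend to fuse into one denser stream; when they diverge, the differing brightness pulls them apart into two streams.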

Further questions for empirical study arising from this case study relate to the accuracy of judgments with the Virtual Geiger Counter: How does it compare with visual scatterplots? Can relations between more than two variables be understood in this way?

Stream-based Sonification Diagram

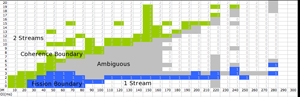

The four case studies described here identified and developed the concept of stream-based sonification. However, the sonification in each case study is specific to a particular task and dataset. This raises the question of whether the stream-based techniques developed in these studies can be extended to a general range of tasks and data. The Van Noorden gallop is the common feature that provides the basis for the design of streaming in each case. Van Noorden used this psychoacoustic effect to map the coherence and fission boundaries that predict whether a listener will hear one or two streams from a particular stimulus [Van Noorden, 1978]. The Stream-based Sonification Diagram, shown in Figure 3, is an adaptation of the Van Noorden Diagram to sonification [Barrass and Best, 2008]. It focuses on the region of IOIs from 100 ms to 200 ms, where the coherence and fission boundaries are close together, because this is where a designer can predictably control whether a listener will hear one or two streams from a particular data relation.

Figure 3. Stream-based Sonification Diagram for the Brightness of a 30 ms Noise Grain
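A diagram of this kind can be used as a lookup: given the IOI and the size of a data-driven difference, it predicts one stream (below the fission boundary), two streams (above the coherence boundary) or an ambiguous percept (between them). The sketch below assumes linear interpolation between sampled boundary points; the `COHERENCE` and `FISSION` curves are hypothetical placeholders with the qualitative Van Noorden shape (a coherence boundary that rises with IOI and a flatter fission boundary), not the measured values in Figure 3.

```python
from bisect import bisect_left
from typing import List, Tuple

Curve = List[Tuple[float, float]]  # (IOI in ms, boundary difference), sorted by IOI

# Hypothetical placeholder curves, NOT measured data. Differences are in
# arbitrary units (e.g. semitones for pitch, another scale for brightness).
COHERENCE: Curve = [(50.0, 4.0), (100.0, 6.0), (200.0, 9.0), (400.0, 14.0)]
FISSION: Curve = [(50.0, 3.0), (100.0, 3.5), (200.0, 4.0), (400.0, 4.5)]


def interpolate(curve: Curve, ioi_ms: float) -> float:
    """Linearly interpolate a boundary at a given IOI, clamped at the ends."""
    xs = [x for x, _ in curve]
    if ioi_ms <= xs[0]:
        return curve[0][1]
    if ioi_ms >= xs[-1]:
        return curve[-1][1]
    i = bisect_left(xs, ioi_ms)
    (x0, y0), (x1, y1) = curve[i - 1], curve[i]
    return y0 + (y1 - y0) * (ioi_ms - x0) / (x1 - x0)


def predict_streams(difference: float, ioi_ms: float) -> str:
    """Classify a difference between alternating sounds at a given IOI."""
    if difference < interpolate(FISSION, ioi_ms):
        return "one stream"       # below fission boundary: always coherent
    if difference > interpolate(COHERENCE, ioi_ms):
        return "two streams"      # above coherence boundary: always segregated
    return "ambiguous"            # between boundaries: depends on attention


if __name__ == "__main__":
    for diff in (2.0, 5.0, 10.0):
        print(diff, predict_streams(diff, ioi_ms=150.0))
```

A designer would replace the placeholder curves with boundaries measured for the chosen sound dimension, then scale the data mapping so that the relations of interest fall on the intended side of each boundary.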

Four Stream-based Sonification Diagrams have been produced through listening experiments. The first repeats the original Van Noorden Diagram at higher temporal resolution within the stream-based sonification region. The other three are new plots of the coherence and fission boundaries for the brightness, amplitude and pan of a noise grain. These plots show that streaming by brightness is closely related to streaming by pitch. They also show a coherence boundary for streaming by amplitude that has not been reported before, and that streaming by spatial panning is relatively unaffected by IOI. The Stream-based Sonification Diagram is significant because it provides a predictive basis for design decisions and integrates the theory of Auditory Scene Analysis into sonification.
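The brightness diagram in Figure 3 is based on 30 ms noise grains. The sketch below shows one way such grains could be generated and their brightness checked, assuming white noise through a one-pole low-pass filter and brightness measured as a magnitude-weighted spectral centroid. The filter type, the 16 kHz sample rate and the helper names are hypothetical; the experiments' actual stimulus synthesis is not described here.

```python
import cmath
import math
import random
from typing import List

SAMPLE_RATE = 16000  # hypothetical sample rate (Hz) for this sketch


def noise_grain(cutoff_hz: float, duration_s: float = 0.03,
                seed: int = 0) -> List[float]:
    """White noise through a one-pole low-pass filter.

    A lower cutoff attenuates high frequencies more, giving a duller grain.
    """
    rng = random.Random(seed)
    n = int(round(duration_s * SAMPLE_RATE))  # 30 ms at 16 kHz -> 480 samples
    a = math.exp(-2.0 * math.pi * cutoff_hz / SAMPLE_RATE)  # pole coefficient
    out: List[float] = []
    y = 0.0
    for _ in range(n):
        y = (1.0 - a) * rng.uniform(-1.0, 1.0) + a * y
        out.append(y)
    return out


def spectral_centroid(x: List[float]) -> float:
    """Magnitude-weighted mean frequency (Hz) from a direct DFT.

    O(n^2), which is acceptable for a grain of a few hundred samples.
    """
    n = len(x)
    weighted = total = 0.0
    for k in range(n // 2 + 1):  # bins from DC up to Nyquist
        s = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        mag = abs(s)
        weighted += (k * SAMPLE_RATE / n) * mag
        total += mag
    return weighted / total if total else 0.0


if __name__ == "__main__":
    for cutoff in (500.0, 2000.0, 4000.0):
        grain = noise_grain(cutoff)
        print(cutoff, round(spectral_centroid(grain)))
```

Using the same seed for every grain keeps the underlying noise identical, so differences in the measured centroid come from the filter setting rather than from the random samples.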

Conclusion

The advances in audio technology since the first conference on Auditory Display in 1992 have provided an increasingly powerful and diverse array of techniques for conveying information in sound. At the same time, the theory of sonification has not kept pace, and the gap between theory and practice has widened rather than narrowed. This paper bridges the gap between technology and theory by integrating Auditory Scene Analysis as a theoretical basis for designing sonifications. The channel-based MIDI model, which technically embodies the metaphor of a musical group or orchestra, has been the dominant pattern in sonification. The case studies described in this paper explore and develop a figure/ground metaphor based on perceptual streams rather than musical instrument channels. This iterative series of practical case studies is an example of design research that integrates an existing theory into a new field of practice. The knowledge developed through this research process is encapsulated in a general form in the Stream-based Sonification Diagram, which provides a scientific basis for a designer to predict perceptual effects in a sonification. The case studies also raised many new questions for further empirical investigation. In future work, these investigations will contribute towards the development of theories specific to sonification.

References

Barrass S. (1998) Auditory Information Design, Doctoral Dissertation, Australian National University, available online from Australian Digital Theses.

Barrass S. (1998) Some Golden Rules for Designing Auditory Displays. In: Boulanger R. (ed) The Csound Book: Perspectives in Software Synthesis, Sound Design, Signal Processing and Programming, MIT Press.

Barrass S. and Best G. (2008) Stream-based Sonification Diagrams, in Proceedings of the International Conference on Auditory Display, Paris, France, June 24-27, 2008.

Barrass S. and Zehner B. (2000) Responsive Sonification of Well-logs. In Proceedings of the International Conference On Auditory Display, Atlanta, USA, April 2-5, 2000.

Barrass S. (2009) Online Portfolio, http://stephenbarrass.wordpress.com/, retrieved 17 September 2009.

Bly S. (1994) Multivariate Data Analysis, in Kramer G. (ed) Auditory Display: Sonification, Audification and Auditory Interfaces, SFI Studies in the Sciences of Complexity, Proceedings Volume XVIII, Addison-Wesley Publishing Company, Reading, MA, U.S.A.

Bregman A.S. (1990) Auditory Scene Analysis, The MIT Press.

Hearst M.A. (1997) Dissonance on Audio Interfaces, IEEE Journal of Intelligent Systems, vol. 12, no. 5, pp. 10-16, Sept. 1997.

Kramer G. et al. (1999) Sonification Report: Status of the Field and Research Agenda, Prepared for the National Science Foundation by members of the International Community for Auditory Display, http://www.icad.org/websiteV2.0/References/nsf.html

Scaletti C. (1994) Sound Synthesis Algorithms for Auditory Data Representations, in Kramer G. (ed) Auditory Display: Sonification, Audification and Auditory Interfaces, SFI Studies in the Sciences of Complexity, Proceedings Volume XVIII, Addison-Wesley Publishing Company, Reading, MA, U.S.A.

Van Noorden L.P.A.S. (1977) Minimum Differences of Level and Frequency for Perceptual Fission of Tone Sequences ABAB, The Journal of the Acoustical Society of America, vol. 61, no. 4, pp. 1041-1045, 1977.

Williams S. (1994) Perceptual Principles in Sound Grouping, in Kramer G. (ed) Auditory Display: Sonification, Audification and Auditory Interfaces, SFI Studies in the Sciences of Complexity, Proceedings Volume XVIII, Addison-Wesley Publishing Company, Reading, MA, U.S.A.